Connection String to Azure OpenAI

This article explains how to define a connection string to the Azure OpenAI Service, enabling RavenDB to use Azure OpenAI models for Embeddings generation tasks, Gen AI tasks, and AI agents.

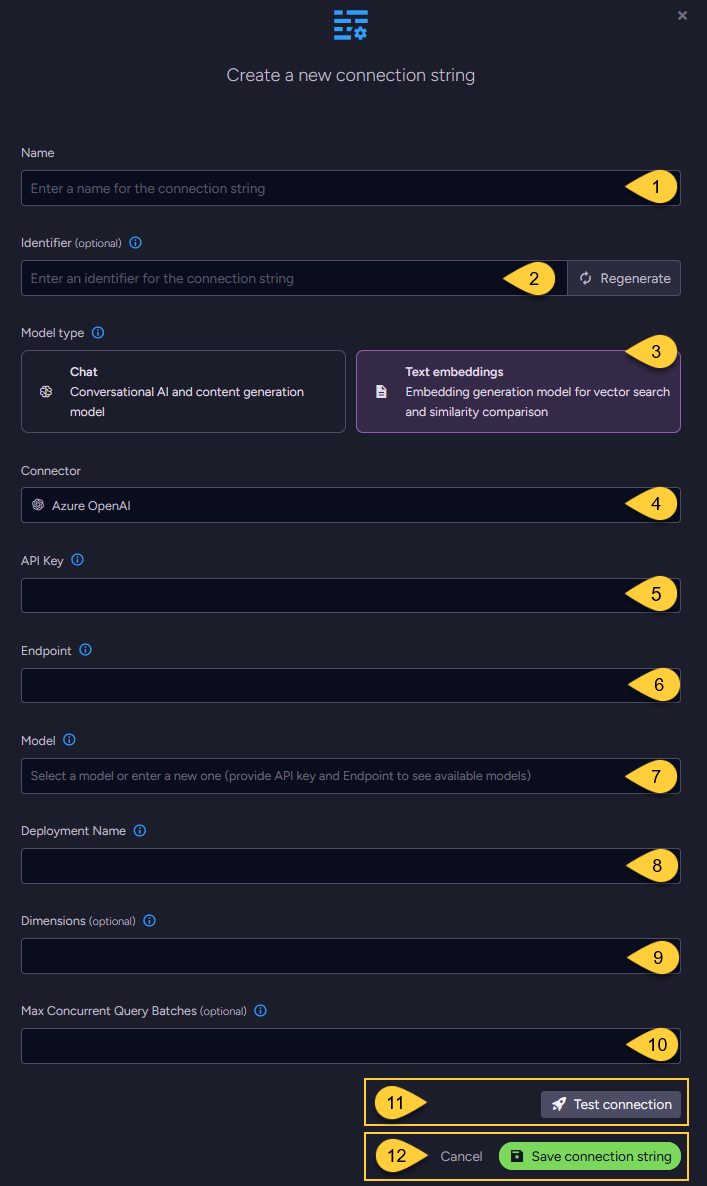

Define the connection string - from Studio

Configuring a text embedding model

- Name

  Enter a name for this connection string.

- Identifier (optional)

  Enter an identifier for this connection string.
  Learn more about the identifier in the connection string identifier section.

- Model Type

  Select "Text Embeddings".

- Connector

  Select Azure OpenAI from the dropdown menu.

- API key

  Enter the API key used to authenticate requests to the Azure OpenAI service.

- Endpoint

  Enter the base URL of your Azure OpenAI resource.

- Model

  Select an Azure OpenAI text embedding model from the dropdown list, or enter a new one.

- Deployment name

  Specify the unique identifier assigned to your model deployment in your Azure environment.

- Dimensions (optional)

  - Specify the number of dimensions for the output embeddings.
    Supported only by text-embedding-3 and later models.
  - If not specified, the model's default dimensionality is used.

- Max concurrent query batches (optional)

  - When making vector search queries, the content of the search terms must also be converted to embeddings to compare them against the stored vectors.
    Requests to generate such query embeddings via the AI provider are sent in batches.
  - This parameter defines the maximum number of these batches that can be processed concurrently.
    You can set a default value using the Ai.Embeddings.MaxConcurrentBatches configuration key.

- Click Test Connection to confirm the connection string is set up correctly.

- Click Save to store the connection string or Cancel to discard changes.

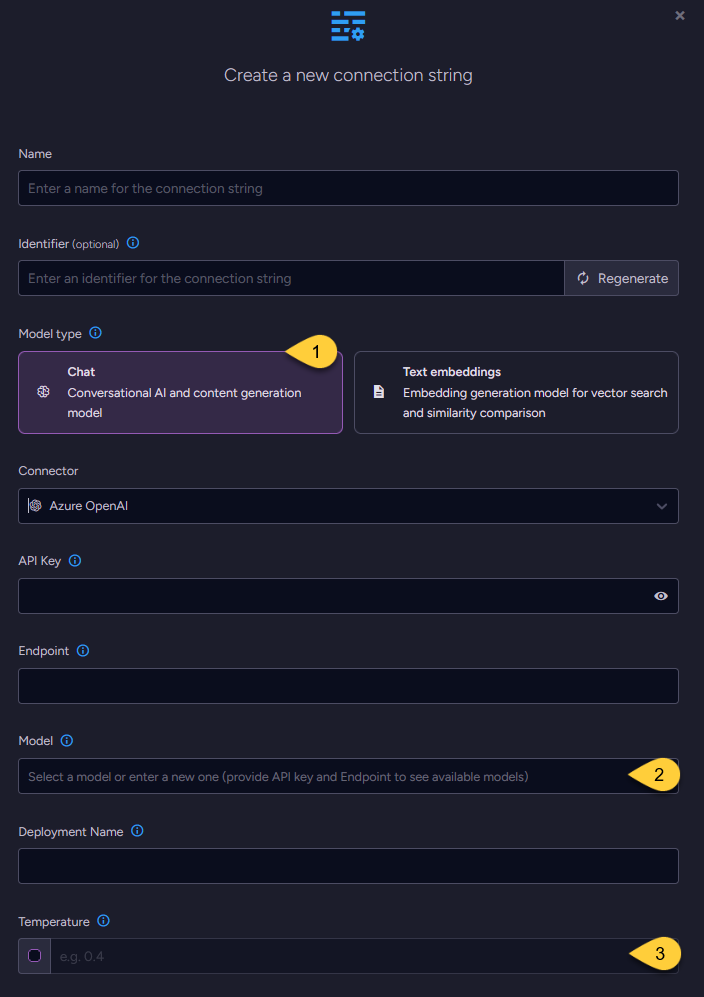

Configuring a chat model

- When configuring a chat model, the UI displays the same base fields as those used for text embedding models, including the connection string Name, optional Identifier, API Key, Endpoint, Deployment Name, and Model name.

- One additional setting is specific to chat models: Temperature.

- Model Type

  Select "Chat".

- Model

  Enter the name of the Azure OpenAI model to use for chat completions.

- Temperature (optional)

  The temperature setting controls the randomness and creativity of the model’s output.
  Valid values typically range from 0.0 to 2.0:

  - Higher values (e.g., 1.0 or above) produce more diverse and creative responses.
  - Lower values (e.g., 0.2) result in more focused, consistent, and deterministic output.
  - If not explicitly set, Azure OpenAI uses a default temperature of 1.0.

  See Azure OpenAI chat completions parameters.

Define the connection string - from the Client API

Connection string for a text embedding model:

```csharp
using (var store = new DocumentStore())
{
    // Define the connection string to Azure OpenAI
    var connectionString = new AiConnectionString
    {
        // Connection string Name & Identifier
        Name = "ConnectionStringToAzureOpenAI",
        Identifier = "identifier-to-the-connection-string", // optional

        // Model type
        ModelType = AiModelType.TextEmbeddings,

        // Azure OpenAI connection settings
        AzureOpenAiSettings = new AzureOpenAiSettings
        {
            ApiKey = "your-api-key",
            Endpoint = "https://your-resource-name.openai.azure.com",

            // Name of text embedding model to use
            Model = "text-embedding-3-small",

            DeploymentName = "your-deployment-name",

            // Optionally, override the default maximum number of query embedding batches
            // that can be processed concurrently
            EmbeddingsMaxConcurrentBatches = 10
        }
    };

    // Deploy the connection string to the server
    var putConnectionStringOp =
        new PutConnectionStringOperation<AiConnectionString>(connectionString);
    var putConnectionStringResult = store.Maintenance.Send(putConnectionStringOp);
}
```

Connection string for a chat model:

```csharp
using (var store = new DocumentStore())
{
    // Define the connection string to Azure OpenAI
    var connectionString = new AiConnectionString
    {
        // Connection string Name & Identifier
        Name = "ConnectionStringToAzureOpenAI",
        Identifier = "identifier-to-the-connection-string", // optional

        // Model type
        ModelType = AiModelType.Chat,

        // Azure OpenAI connection settings
        AzureOpenAiSettings = new AzureOpenAiSettings
        {
            ApiKey = "your-api-key",
            Endpoint = "https://your-resource-name.openai.azure.com",

            // Name of chat model to use
            Model = "gpt-4o-mini",

            DeploymentName = "your-deployment-name",

            // Optionally, set the model's temperature
            Temperature = 0.4
        }
    };

    // Deploy the connection string to the server
    var putConnectionStringOp =
        new PutConnectionStringOperation<AiConnectionString>(connectionString);
    var putConnectionStringResult = store.Maintenance.Send(putConnectionStringOp);
}
```
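Neither example above sets the optional Dimensions property. The following is a minimal sketch of a text embedding connection string that requests shorter vectors, assuming a text-embedding-3 model, since the Studio section notes that only these and later models support the setting. The server URL, the database name, and the value 512 are illustrative placeholders rather than values from this article, and the `using` namespaces are an assumption that may differ between client versions.

```csharp
// Assumed namespaces; adjust them to match your RavenDB client version.
using Raven.Client.Documents;
using Raven.Client.Documents.Operations.AI;
using Raven.Client.Documents.Operations.ConnectionStrings;

using (var store = new DocumentStore
{
    Urls = new[] { "http://localhost:8080" }, // placeholder server URL
    Database = "YourDatabaseName"             // placeholder database name
})
{
    store.Initialize();

    var connectionString = new AiConnectionString
    {
        Name = "ConnectionStringToAzureOpenAI",
        ModelType = AiModelType.TextEmbeddings,

        AzureOpenAiSettings = new AzureOpenAiSettings
        {
            ApiKey = "your-api-key",
            Endpoint = "https://your-resource-name.openai.azure.com",
            Model = "text-embedding-3-small",
            DeploymentName = "your-deployment-name",

            // Illustrative value: request 512-dimensional embeddings.
            // Leave this unset (null) to use the model's default dimensionality.
            Dimensions = 512
        }
    };

    // Deploy the connection string to the server
    store.Maintenance.Send(
        new PutConnectionStringOperation<AiConnectionString>(connectionString));
}
```

Leaving Dimensions unset is the safer choice when the deployed model predates text-embedding-3, because earlier models do not accept this setting.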

Syntax

```csharp
public class AiConnectionString
{
    public string Name { get; set; }
    public string Identifier { get; set; }
    public AiModelType ModelType { get; set; }
    public AzureOpenAiSettings AzureOpenAiSettings { get; set; }
}

public class AzureOpenAiSettings : AbstractAiSettings
{
    public string ApiKey { get; set; }
    public string Endpoint { get; set; }
    public string Model { get; set; }
    public string DeploymentName { get; set; }

    // Relevant only for TEXT EMBEDDING models:
    // Specifies the number of dimensions in the generated embedding vectors.
    public int? Dimensions { get; set; }

    // Relevant only for CHAT models:
    // Controls the randomness and creativity of the model’s output.
    // Higher values (e.g., 1.0 or above) produce more diverse and creative responses.
    // Lower values (e.g., 0.2) result in more focused and deterministic output.
    // If set to 'null', the temperature is not sent and the model's default will be used.
    public double? Temperature { get; set; }
}

public class AbstractAiSettings
{
    public int? EmbeddingsMaxConcurrentBatches { get; set; }
}
```