AI Agents Integration: Studio

Create AI Agent

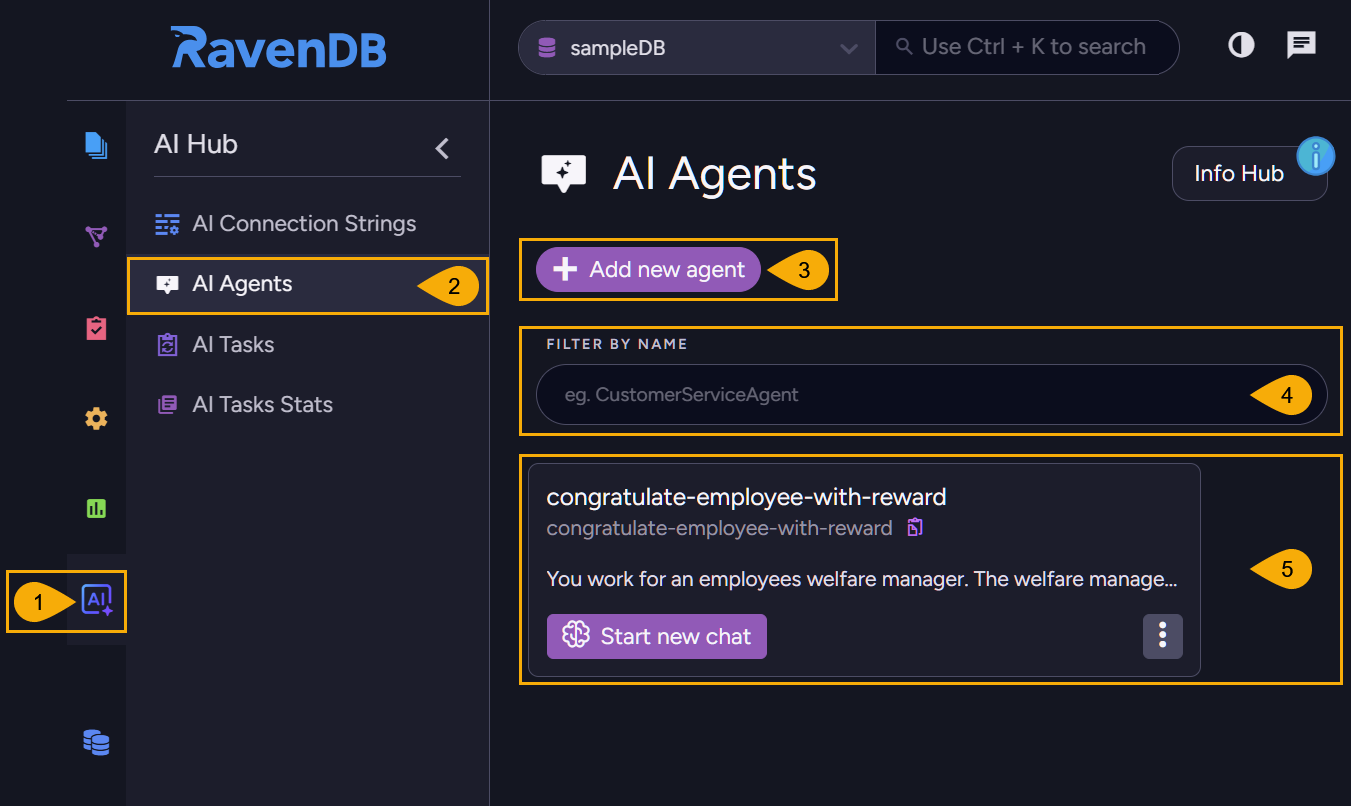

To create an AI agent, open AI Hub > AI Agents and click Add new agent.


AI Hub

Click to open the AI Hub view.

Use this view to handle AI connection strings and tasks, and to view task statistics.

AI Agents

Click to open the AI Agents view.

Use this view to list, configure, or remove your agents.

Add new agent

Click to add an AI agent. The Create AI Agent dialog will open, allowing you to define and test your agent.

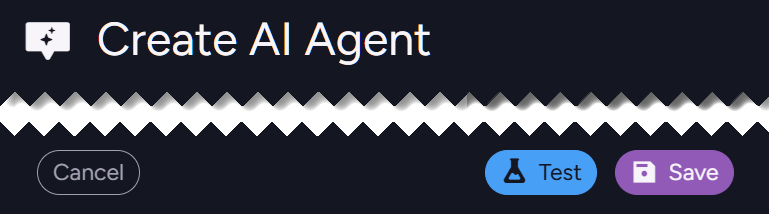

Use the buttons on the bottom bar to Cancel, Save, or Test your changes.


Filter by name

When multiple agents are defined, you can filter them by a string you enter here.

Defined agent

After you define an agent, it is listed in this view, where you can run, edit, or remove it.

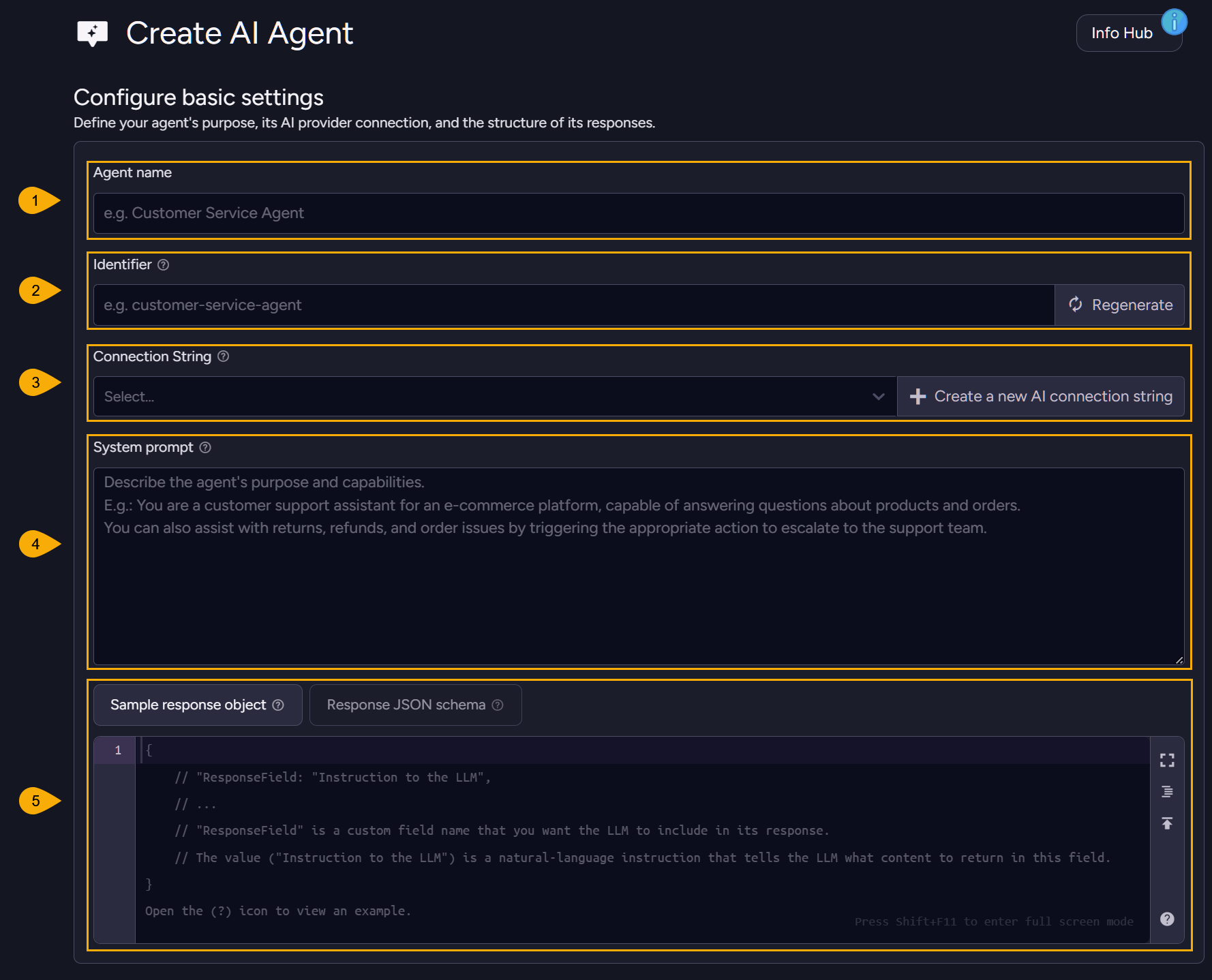

Configure basic settings


Agent name

Give your agent a meaningful name that clarifies its purpose even when multiple agents are defined.

E.g., CustomerSupportAgent

Identifier

Give your agent a unique identifier.

You can provide the identifier yourself, or click Regenerate to create it automatically.

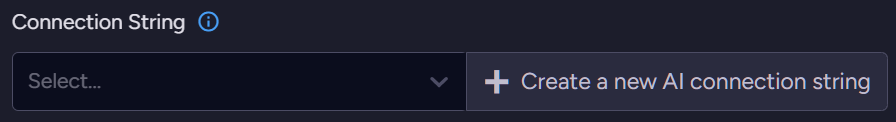

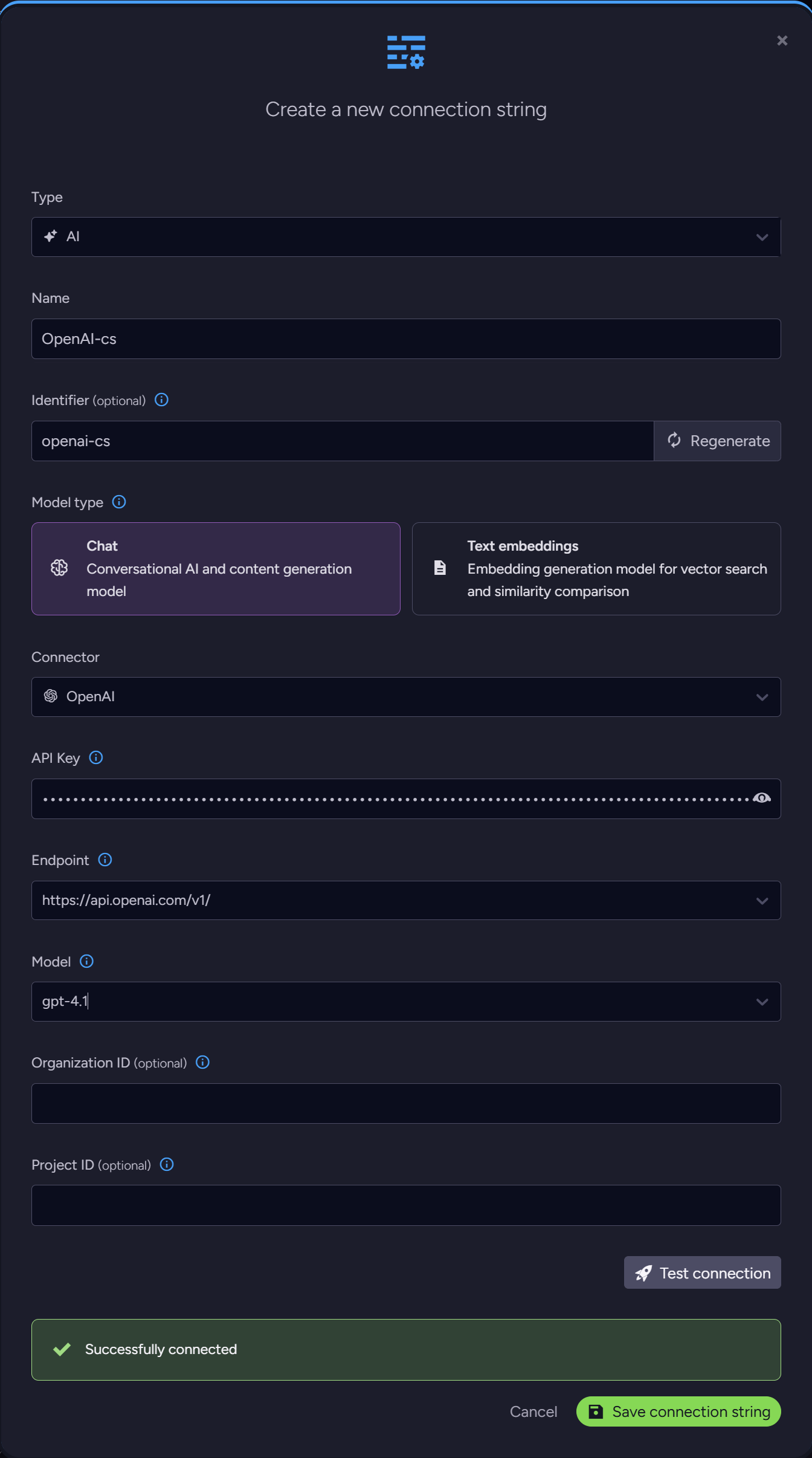

Connection String

Select an existing connection string that the agent will use to connect to your LLM of choice, or click Create a new AI connection string to define a new one.

Your agent can use a local model (e.g., one served by Ollama) or an external service such as OpenAI.


System prompt

Provide a prompt that determines the LLM's role and purpose.

When your agent connects to the LLM, it passes it your system prompt, the sample object or schema that shapes its response, your agent tools, and, if you continue an existing conversation, the entire conversation history.
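To make the list above concrete, here is a minimal Python sketch of the kind of payload an agent might assemble for the LLM. The message roles follow the common chat-completion convention. The function name and the `response_schema` and `tools` field names are hypothetical and do not describe RavenDB's actual wire format.

```python
import json
from typing import Optional


def build_llm_request(system_prompt: str, response_schema: dict, tools: list,
                      user_prompt: str, history: Optional[list] = None) -> dict:
    """Bundle what the agent sends the LLM (hypothetical field names)."""
    # The system prompt defines the LLM's role and purpose.
    messages = [{"role": "system", "content": system_prompt}]
    # When an existing conversation is continued, its whole history is resent,
    # because the LLM keeps no memory of earlier requests.
    messages.extend(history or [])
    # The user prompt for this turn comes last.
    messages.append({"role": "user", "content": user_prompt})
    return {"messages": messages, "response_schema": response_schema, "tools": tools}


request = build_llm_request(
    system_prompt="You are a customer support agent for an online store.",
    response_schema={"type": "object", "properties": {"Answer": {"type": "string"}}},
    tools=[{"name": "OrdersByCountry", "description": "Retrieve orders shipped to a country"}],
    user_prompt="How many orders ship to France?",
    history=[{"role": "user", "content": "Hi"},
             {"role": "assistant", "content": "Hello! How can I help?"}],
)
print(json.dumps(request, indent=2))
```

Resending the full history on every request is what makes the chat trimming settings described later worthwhile.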

Sample response object and Response JSON schema

Click "Sample response object" or "Response JSON schema" to switch between the two tabs.

Provide a JSON-based sample response object that defines the layout of the AI model's reply.

Design it so that LLM responses include the data you need and are easy for the client to process. Behind the scenes, RavenDB translates your sample object into a JSON schema before sending it to the LLM. If you prefer, you can skip the translation phase by defining the response JSON schema yourself (see below).

If you define both a sample response object and an explicit schema, only the schema will be used.
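The translation step and the "schema wins" rule can be pictured with a short Python sketch. It infers a minimal JSON schema from a sample object by mapping each value's type. This is an illustration of the idea only: RavenDB's own translation is not shown here and may produce a different schema, and both function names are hypothetical.

```python
import json


def sample_to_schema(sample):
    """Infer a minimal JSON schema from a sample value (illustrative only)."""
    if sample is None:
        return {"type": "null"}
    if isinstance(sample, bool):  # check bool before int: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(sample, int):
        return {"type": "integer"}
    if isinstance(sample, float):
        return {"type": "number"}
    if isinstance(sample, str):
        return {"type": "string"}
    if isinstance(sample, list):
        # Assume every array item looks like the first sample item.
        return {"type": "array", "items": sample_to_schema(sample[0]) if sample else {}}
    if isinstance(sample, dict):
        return {
            "type": "object",
            "properties": {key: sample_to_schema(value) for key, value in sample.items()},
            "required": list(sample.keys()),
            "additionalProperties": False,
        }
    raise TypeError(f"Unsupported sample value: {sample!r}")


def effective_schema(sample=None, schema=None):
    """If both are defined, only the explicit schema is used."""
    if schema is not None:
        return schema
    return sample_to_schema(sample) if sample is not None else None


print(json.dumps(effective_schema(sample={"EmployeeID": "employees/1-A", "EmployeeProfit": "1760"}), indent=2))
```

Note that a sample value written as a string (such as "1760") yields a string type in the schema. Use a number in the sample object if you want a numeric field.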


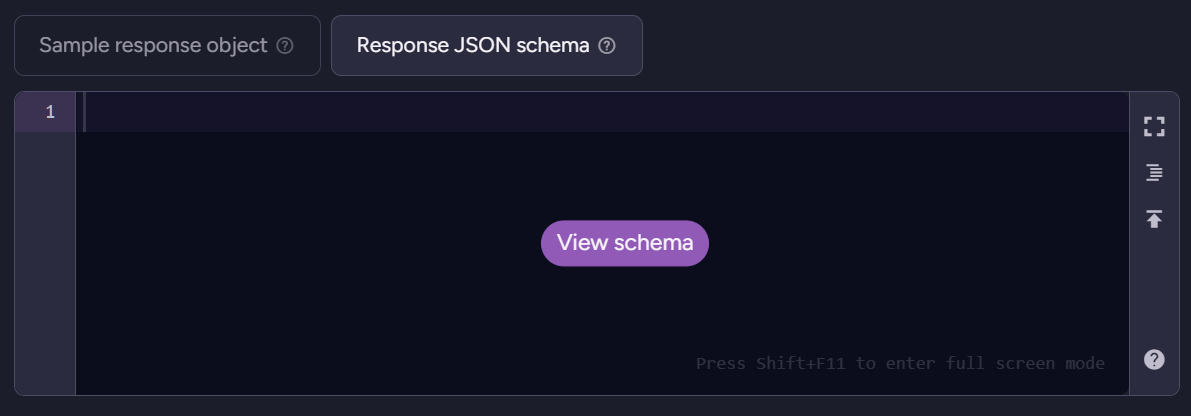

Response JSON schema

Enter a JSON schema that defines the layout of LLM responses. If you design a sample object and then open the schema tab, you can translate the sample object into a schema by clicking the "View schema" button.

If you define both a sample response object and an explicit schema, only the schema will be used.


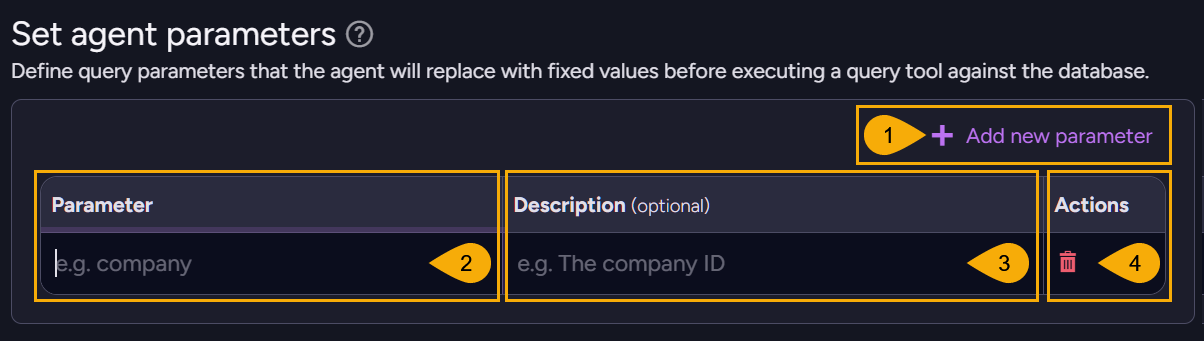

Set agent parameters

Define agent parameters.

Values for the agent parameters you define are provided by the client when it runs the agent. When the agent runs a query tool that includes an agent parameter, the value provided by the client is placed in the query instead of the parameter name.


Add new parameter

Click to add an agent parameter.

Name

Enter the agent parameter name.

Description

Explain the parameter so the LLM will understand its role.

Remove parameter

Remove a defined parameter from the list.

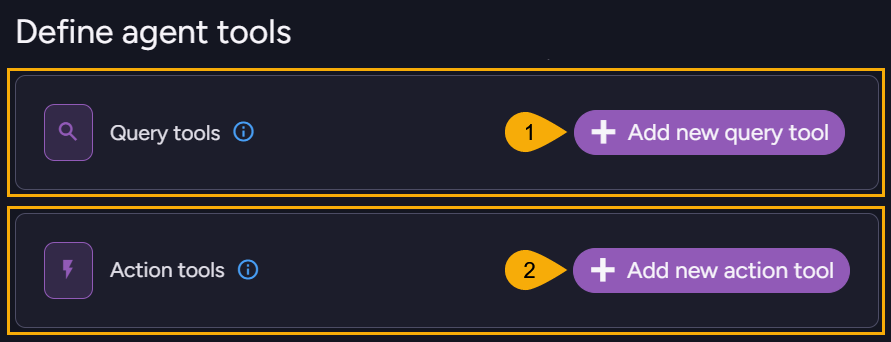

Define agent tools

Define Query and Action agent tools.

When the agent is executed, it passes the LLM the list of tools you define here. The LLM can then trigger these tools to retrieve needed data from the database or to apply changes, such as creating or modifying documents.

Agent tools are not applied directly by the LLM. The LLM triggers them, and they are applied by the agent or by the client.

When the LLM needs to apply a query tool, it notifies the agent, which executes the query and passes its results to the LLM in the sample object you define for the tool.

When the LLM needs to apply an action tool, it sends its request through the agent, and the client performs the action and returns its result.
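The division of labor described above can be sketched in a few lines of Python. Query tools run inside the agent, and their results go back to the LLM. Action tools are collected and handed to the client. In this sketch, `AgentSketch`, `ToolCall`, and the tool names are hypothetical, and plain Python callables stand in for the RQL queries a real agent would run.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set, Tuple


@dataclass
class ToolCall:
    name: str
    arguments: Dict[str, Any]


@dataclass
class AgentSketch:
    # Query tools are executed by the agent itself (callables stand in for RQL queries).
    query_tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    # Action tools are only named here: the agent forwards them to the client.
    action_tools: Set[str] = field(default_factory=set)

    def handle(self, calls: List[ToolCall]) -> Tuple[list, list]:
        """Split the LLM's tool calls into query results and pending actions."""
        results_for_llm = []  # (tool name, result) pairs returned to the LLM
        pending_actions = []  # calls the client must perform
        for call in calls:
            if call.name in self.query_tools:
                result = self.query_tools[call.name](**call.arguments)
                results_for_llm.append((call.name, result))
            elif call.name in self.action_tools:
                pending_actions.append(call)
            else:
                raise KeyError(f"Unknown tool: {call.name}")
        return results_for_llm, pending_actions


orders = [{"Id": "orders/1-A", "Country": "France"}, {"Id": "orders/2-A", "Country": "Spain"}]
agent = AgentSketch(
    query_tools={"OrdersByCountry": lambda country: [o["Id"] for o in orders if o["Country"] == country]},
    action_tools={"CreateSummary"},
)
results, pending = agent.handle([
    ToolCall("OrdersByCountry", {"country": "France"}),
    ToolCall("CreateSummary", {"Summary": "One order ships to France."}),
])
print(results)  # [('OrdersByCountry', ['orders/1-A'])]
```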


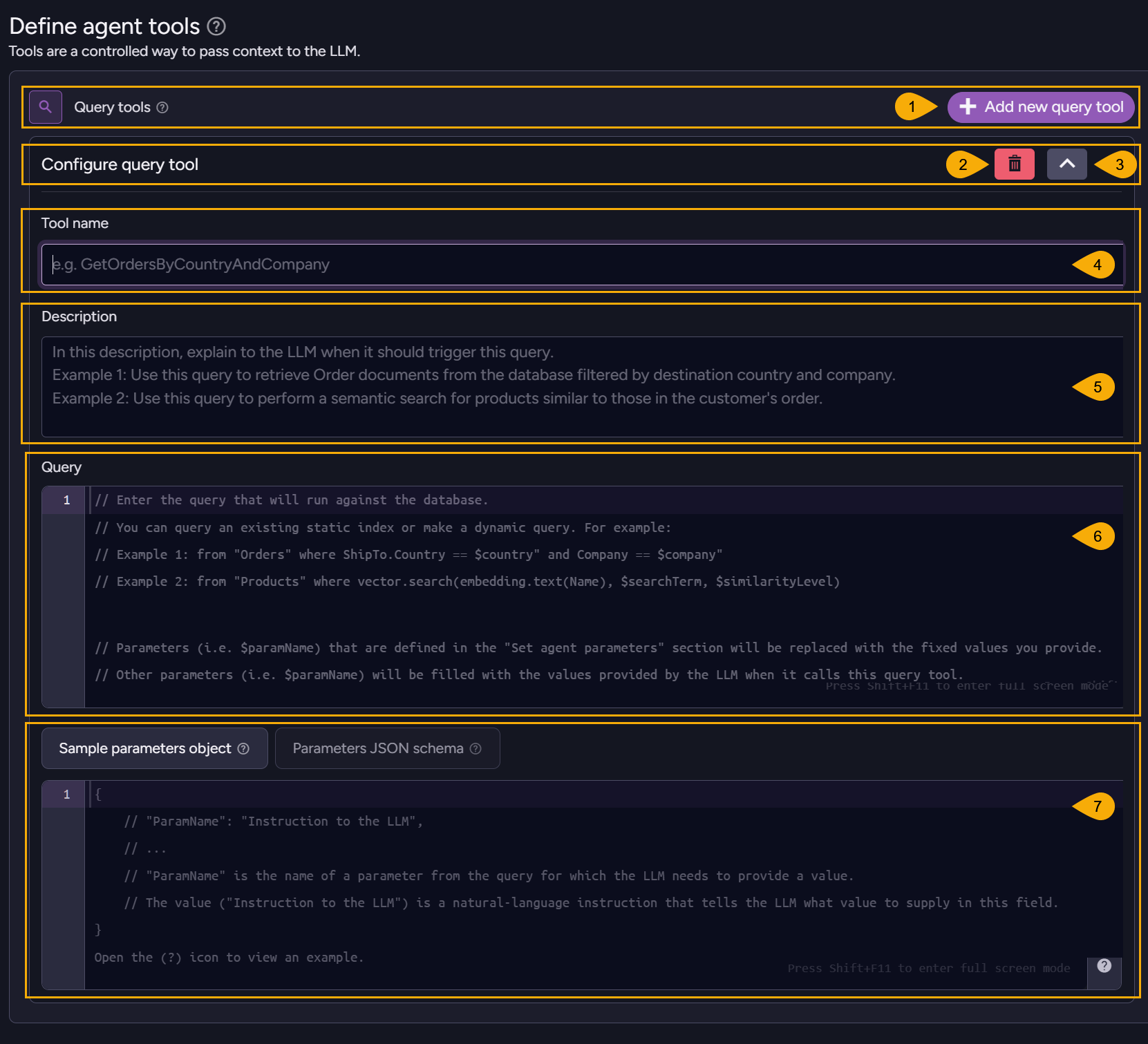

Query tools

Click to add a new query tool.

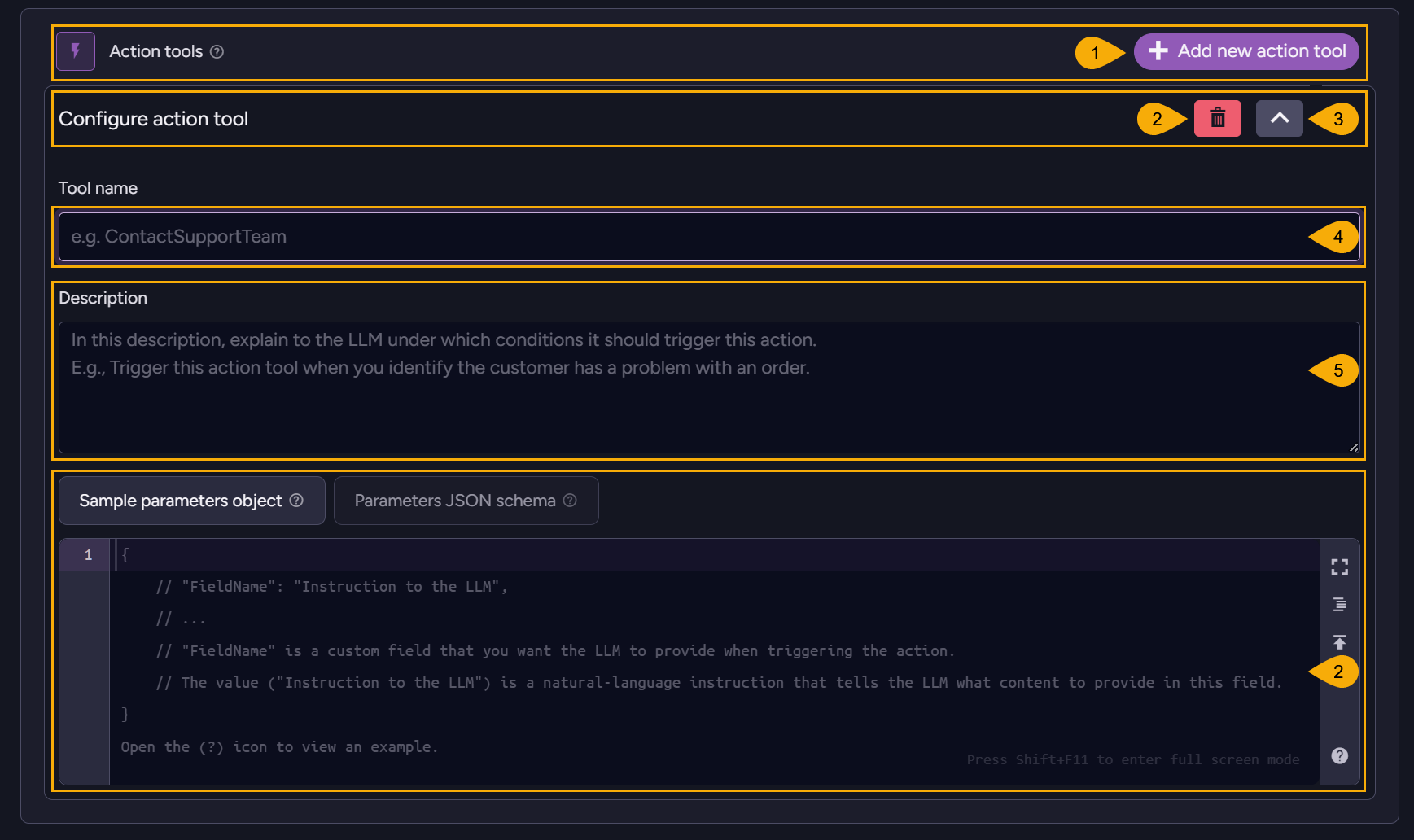

Action tools

Click to add a new action tool.

Add new query tool


Cancel / Save

Cancel your changes, or save the query tool when it is ready.

Expand/Collapse tool

Tool name

Give your query tool a meaningful name.

Description

Write a description that will explain to the LLM in natural language what the attached query can be used for.

E.g., "Apply this query when you need to retrieve all the companies that reside in a certain country."

Query

Write the query that the agent will run when the LLM requests it to use this tool.

Sample parameters object and Parameters JSON schema

Click "Sample parameters object" or "Parameters JSON schema" to switch between the two tabs.

Define an object with parameters that the LLM can set and request the agent to use in the query.

If you define both a sample parameters object and a schema, only the schema will be used.
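When you write the query and its sample parameters object, it can help to check that every `$`-parameter in the query will actually receive a value. The Python sketch below assumes that the keys of the sample parameters object correspond to `$`-parameters of the same name in the query. That mapping, the tool dictionary, and the helper names are all hypothetical. The regex is naive and would also match a `$` inside a string literal.

```python
import re

# A hypothetical query tool definition mirroring the fields in the dialog.
tool = {
    "name": "OrdersByCountry",
    "description": "Apply this query when you need to retrieve orders shipped to a certain country",
    "query": 'from "Orders" where ShipTo.Country == $country and Freight > $minFreight',
    # Keys the LLM is expected to fill in; the values describe them to the LLM.
    "sample_parameters": {"minFreight": "Minimum freight cost, as a number"},
}

PARAM_PATTERN = re.compile(r"\$([A-Za-z_]\w*)")


def query_parameter_names(rql: str) -> set:
    """Return the names of $-parameters used in an RQL query (naive scan)."""
    return set(PARAM_PATTERN.findall(rql))


def missing_parameters(tool: dict, agent_parameters: set) -> set:
    """Query parameters supplied neither by the LLM (sample object) nor by the client (agent parameters)."""
    supplied = set(tool["sample_parameters"]) | set(agent_parameters)
    return query_parameter_names(tool["query"]) - supplied


print(missing_parameters(tool, agent_parameters={"country"}))  # set()
print(missing_parameters(tool, agent_parameters=set()))        # {'country'}
```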

Add new action tool


Cancel / Save

Cancel your changes, or save the action tool when it is ready.

Expand/Collapse tool

Tool name

Give your action tool a meaningful name.

Description

Write a description that explains to the LLM in natural language when this action tool should be applied.

E.g., "Apply this action tool when you need to create a new summary document."

Sample parameters object and Parameters JSON schema

Click "Sample parameters object" or "Parameters JSON schema" to switch between the two tabs.

Define an object that the agent will fill with the data needed for the client's action. If you define both a sample parameters object and a schema, only the schema will be used.
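In Studio you perform the requested action yourself. A client application would do it in code. The Python sketch below shows one possible client-side shape for that step. It parses the parameters object filled in for a `CreateSummary` action, performs the action against an in-memory dictionary, and returns a result string to report back. The tool name, the `Summary` field, and all function names are hypothetical, and no RavenDB client API is used.

```python
import json
from datetime import datetime, timezone

# In-memory stand-in for the client's database: document ID -> document.
documents = {}


def create_summary(args: dict) -> str:
    """Hypothetical handler for a 'CreateSummary' action tool."""
    doc_id = f"summaries/{len(documents) + 1}"
    documents[doc_id] = {
        "Text": args["Summary"],
        "CreatedAt": datetime.now(timezone.utc).isoformat(),
    }
    # This string is what the client reports back as the action's result.
    return f"Created {doc_id}"


# Maps each action tool name to the client code that performs it.
ACTION_HANDLERS = {"CreateSummary": create_summary}


def run_action(tool_name: str, raw_arguments: str) -> str:
    """Parse the parameters object filled in for the action and run the matching handler."""
    args = json.loads(raw_arguments)
    return ACTION_HANDLERS[tool_name](args)


print(run_action("CreateSummary", '{"Summary": "Three orders ship to France."}'))  # Created summaries/1
```

Keeping a name-to-handler map means that adding an action tool to the agent only requires registering one more client function.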

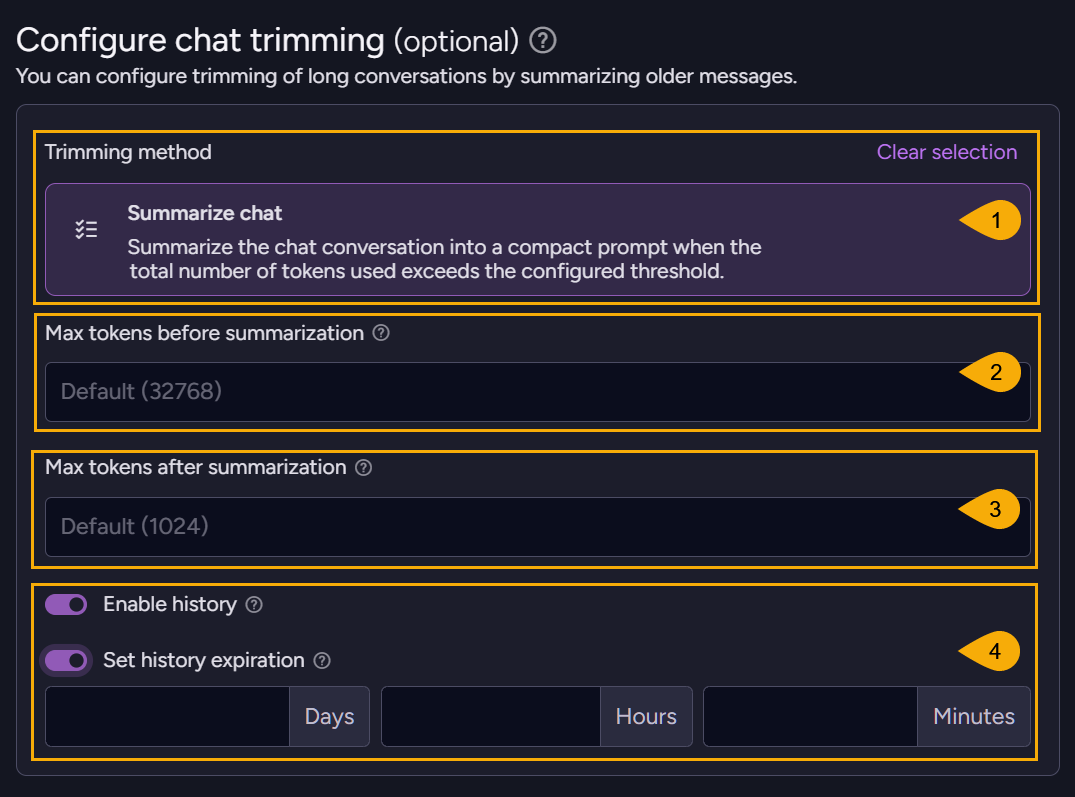

Configure chat trimming

The LLM keeps no record of previous conversations it conducted. To allow a continuous conversation, the agent sends the LLM the entire conversation history with each request. To minimize these transfers, you can configure the agent to summarize messages before sending the history to the LLM.


Summarize chat

Use this option to limit the size of the conversation history. If the history grows beyond this limit, it is summarized before it is sent to the LLM.

Max tokens before summarization

If the total number of tokens in the conversation exceeds the limit you set here, the conversation will be summarized.

Max tokens after summarization

Set the maximum number of tokens that will be left in the conversation after it is summarized.

Messages exceeding the set limit will be removed, starting with the oldest.
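One way to read the two token limits is shown in the Python sketch below. If the conversation exceeds the "before" limit, it is summarized, and then the oldest original messages are dropped until the summary plus the remaining messages fit the "after" limit. This is an assumed interpretation: RavenDB's exact trimming policy may differ. Here a whitespace word count stands in for a real tokenizer, and `summarize` stands in for the LLM call that produces the summary.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def trim_history(messages: List[str], max_before: int, max_after: int,
                 summarize: Callable[[List[str]], str]) -> List[str]:
    """Summarize and trim a conversation under one reading of the settings above."""
    total = sum(count_tokens(m) for m in messages)
    if total <= max_before:
        return list(messages)  # under the threshold: history is sent unchanged
    summary = summarize(messages)
    kept = list(messages)
    # Remove the oldest messages until the summary plus what remains fits max_after.
    while kept and count_tokens(summary) + sum(count_tokens(m) for m in kept) > max_after:
        kept.pop(0)
    return [summary] + kept


history = ["a b c", "d e", "f g h i", "j"]  # 10 "tokens" in total
print(trim_history(history, max_before=8, max_after=6, summarize=lambda ms: "summary"))
# ['summary', 'f g h i', 'j']
```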

History

- Enable history
  When history is enabled, the chat sent to the LLM will be summarized, but copies of the original chats will be kept in dedicated documents in the `@conversations-history` collection.
- Set history expiration
  When this option is enabled, conversations will be deleted from the `@conversations-history` collection when their age exceeds the period you set in the time boxes.
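The expiration rule amounts to comparing each document's age with the configured period. The Python sketch below assumes that the time boxes hold days, hours, and minutes. That split, the `history/...` document IDs, and the function name are hypothetical. The sketch does not show how RavenDB actually schedules the deletion.

```python
from datetime import datetime, timedelta, timezone


def expired_history_ids(history_docs, now, days=0, hours=0, minutes=0):
    """IDs of history documents whose age exceeds the configured period.

    `history_docs` maps a document ID to its creation time.
    """
    period = timedelta(days=days, hours=hours, minutes=minutes)
    return sorted(doc_id for doc_id, created in history_docs.items() if now - created > period)


now = datetime(2025, 1, 31, 12, 0, tzinfo=timezone.utc)
docs = {
    "history/1": datetime(2025, 1, 1, tzinfo=timezone.utc),
    "history/2": datetime(2025, 1, 30, tzinfo=timezone.utc),
}
print(expired_history_ids(docs, now, days=7))  # ['history/1']
```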

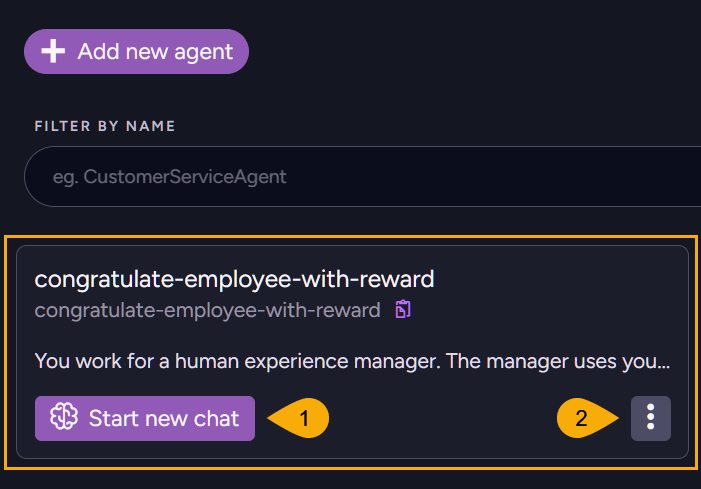

Save and Run your agent

When you're done configuring your agent, save it using the save button at the bottom.

You will find your agent in the main AI Agents view, where you can run or edit it.


Start new chat

Click to run your agent.

Edit agent

Click to edit the agent.

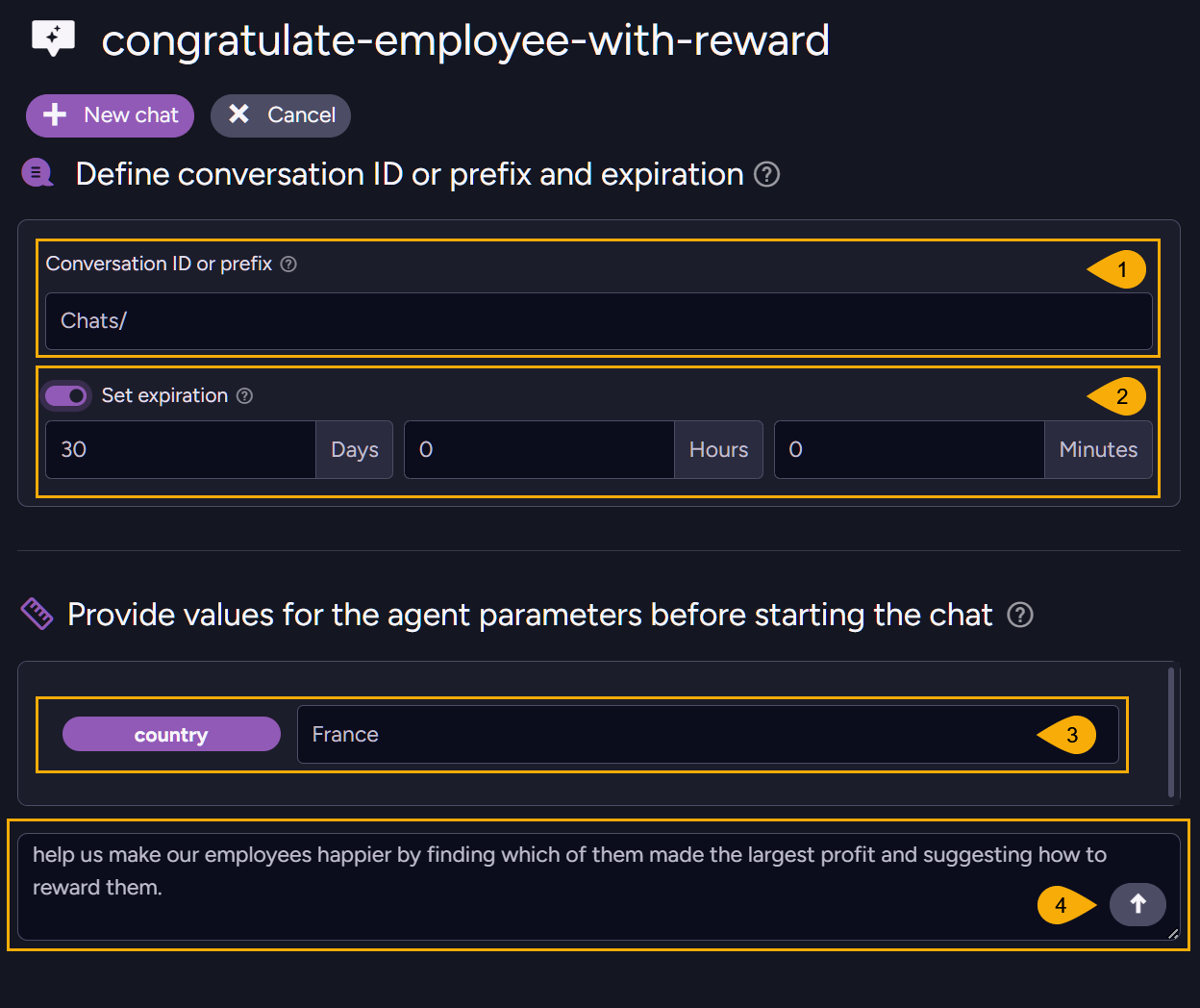

Start new chat

Starting a new chat will open the chat window, where you can provide values for the parameters you defined for this agent and enter a user prompt that explains to the agent what you expect from this conversation.


Conversation ID or prefix

- Entering a prefix (e.g. `Chats/`) will start a new conversation, with the prefix preceding an automatically created conversation ID.
- Entering the ID of a conversation that doesn't exist will start a new conversation as well.
- Entering the ID of an existing conversation will send its history to the LLM and allow you to continue where you left off.
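The three cases above can be expressed as a small decision function. The Python sketch assumes that a value ending in `/` is treated as a prefix, as in the `Chats/` example. It also assumes that an unknown ID starts a new conversation under that same ID. The `uuid4` suffix is a placeholder, since RavenDB generates its own IDs, and the function name is hypothetical.

```python
import uuid
from typing import Set, Tuple


def resolve_conversation(value: str, existing_ids: Set[str]) -> Tuple[str, bool]:
    """Return (conversation ID, starts a new conversation?) for the typed value."""
    if value.endswith("/"):
        # Treated as a prefix: a new conversation with a generated ID after it.
        # (uuid4 is a placeholder; RavenDB generates its own IDs.)
        return value + uuid.uuid4().hex, True
    if value in existing_ids:
        # Existing conversation: its history is sent and the chat continues.
        return value, False
    # Unknown ID: a new conversation is started under that ID.
    return value, True


print(resolve_conversation("Chats/1", {"Chats/1"}))  # ('Chats/1', False)
```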

Set expiration

Enable this option and set an expiration period to automatically delete conversations from the `@conversations` collection when their age exceeds the set period.

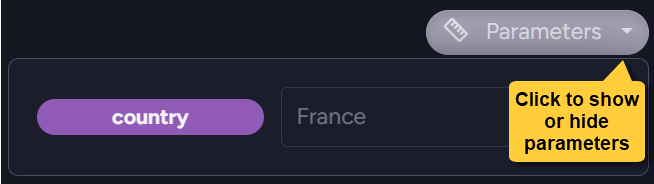

Agent parameters

Enter a value for each parameter defined in the agent configuration.

These values will be embedded in the RQL queries of query tools wherever you included agent parameters.

E.g., if you enter `France` here as the value for `Country`, a query tool's RQL query `from "Orders" where ShipTo.Country == $country` will be executed as `from "Orders" where ShipTo.Country == "France"`.
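The substitution in this example can be reproduced with a short Python sketch that renders a query with its `$`-parameters replaced by client-provided values. It is a textual illustration of the effect only. A real client would pass values as query parameters rather than splicing text into the query, and JSON quoting is used here as an approximation of RQL string literals. The function name is hypothetical, and the parameter key is assumed to be `country`, as written in the query.

```python
import json
import re


def render_query(rql: str, parameters: dict) -> str:
    """Show how a query reads once its $-parameters are replaced by client values."""
    def replace(match):
        # json.dumps quotes strings and leaves numbers bare (an approximation of RQL literals).
        return json.dumps(parameters[match.group(1)])
    return re.sub(r"\$([A-Za-z_]\w*)", replace, rql)


print(render_query('from "Orders" where ShipTo.Country == $country', {"country": "France"}))
# from "Orders" where ShipTo.Country == "France"
```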

User prompt

Use the user prompt to explain to the agent what you expect from it in this conversation.

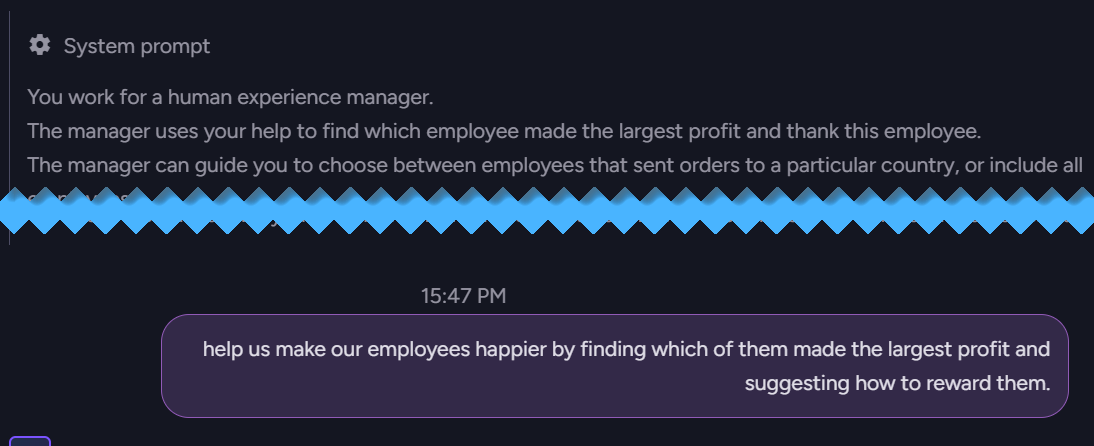

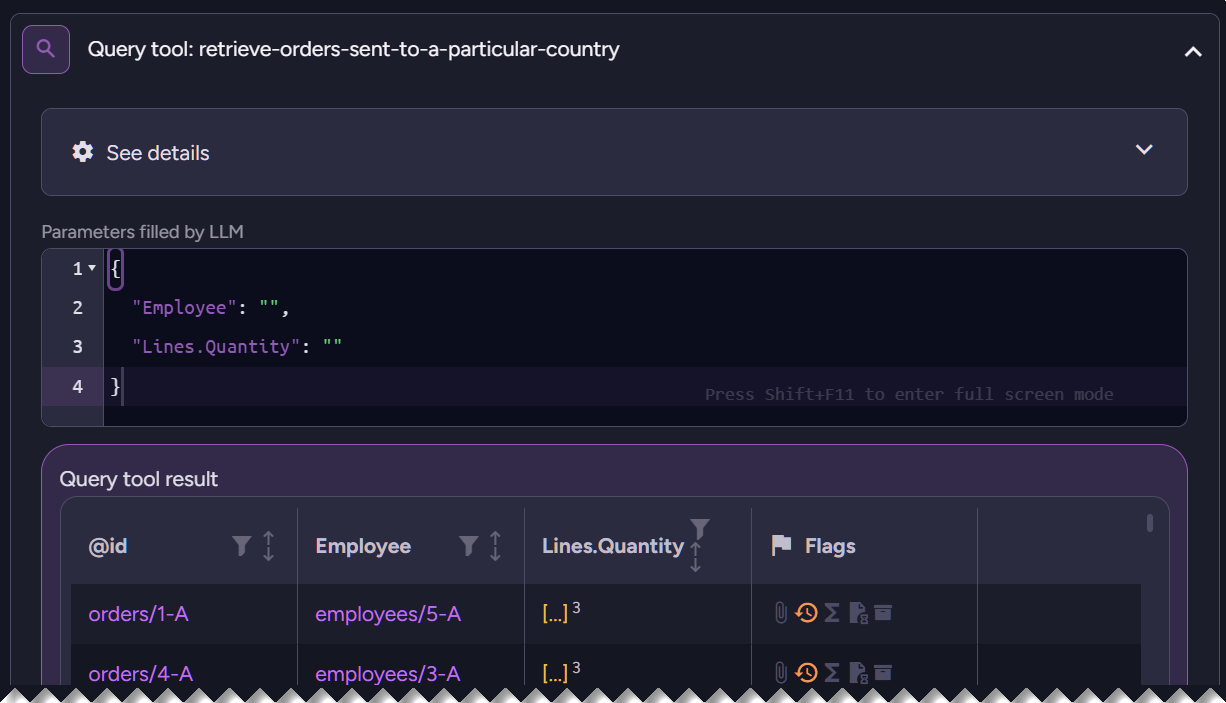

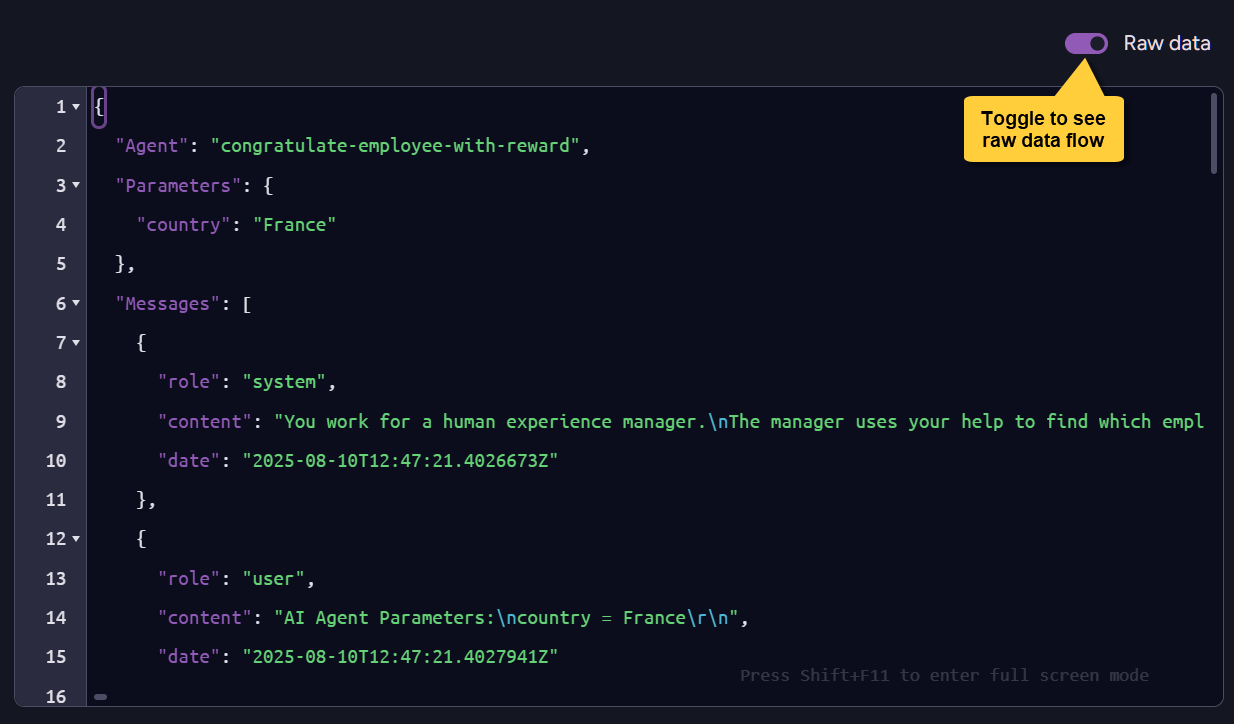

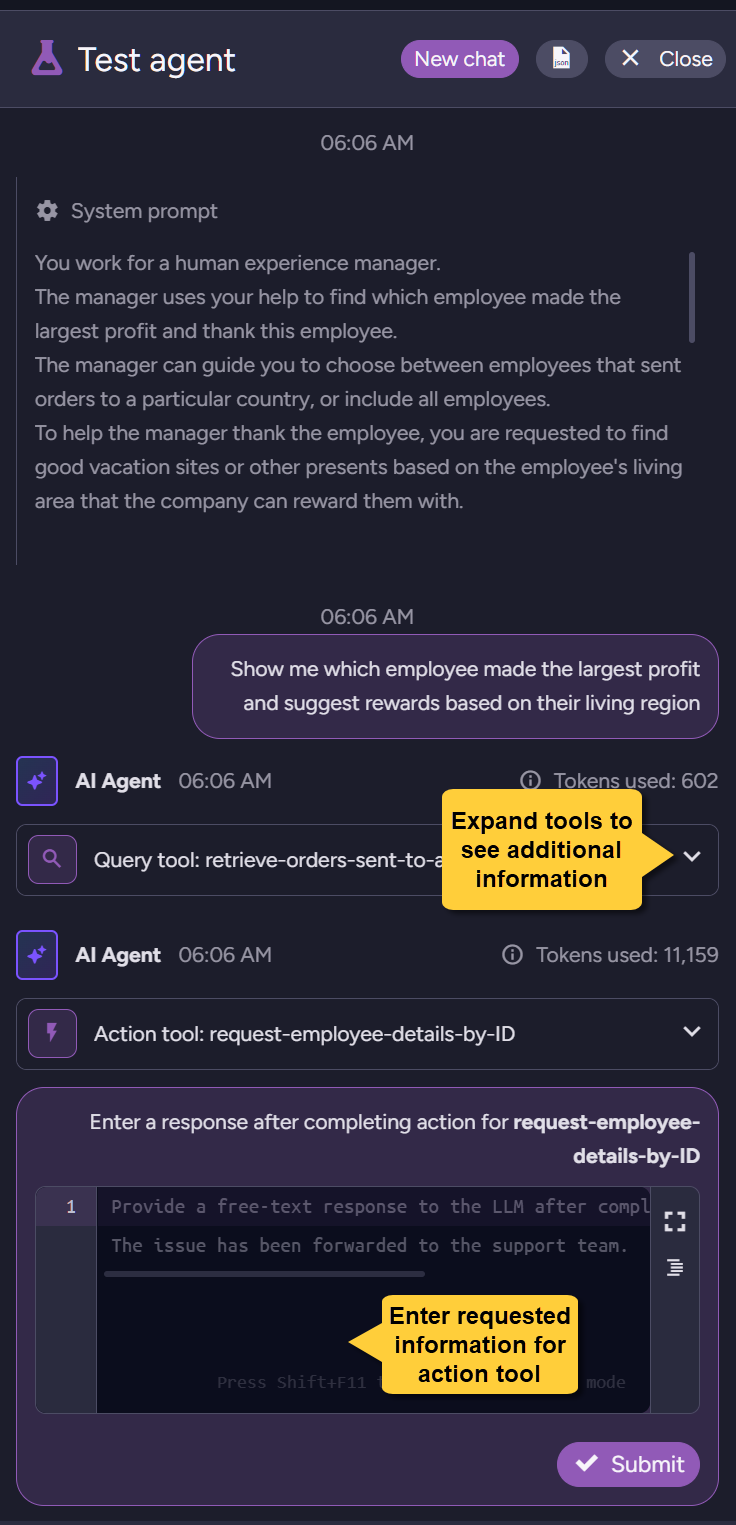

Agent interaction

Running the agent presents its components and interactions.

Agent parameters and their values:

The system prompt set for the LLM and the user prompt:

The query tools and their activity:

You can view the raw data of the agent's activity in JSON form as well:

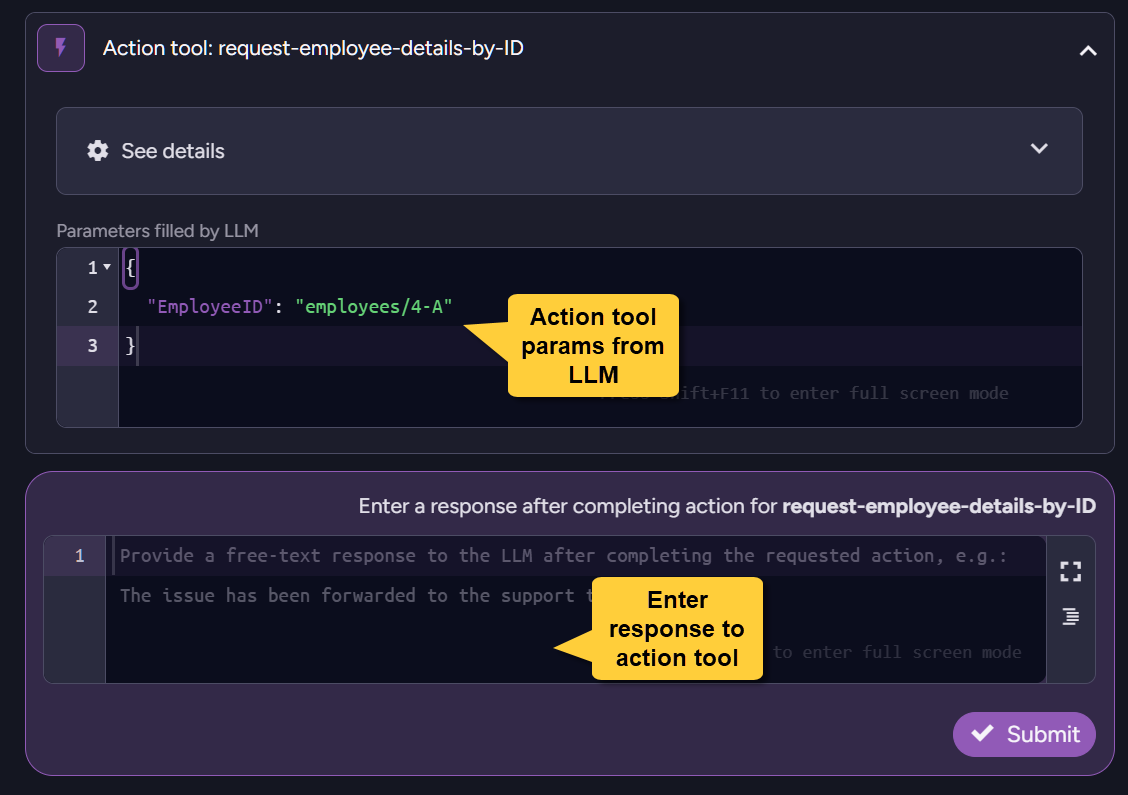

Action tool dialog

If the agent runs action tools, you will see a dialog that shows the information provided by the LLM when it requested the action, and a dialog inviting you to enter the results of the action once you have performed it.

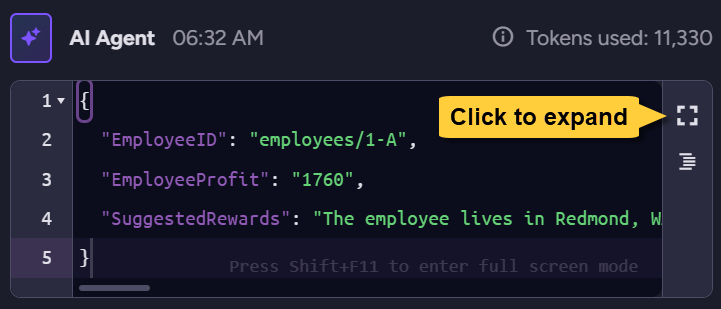

Agent results

Finally, when the AI model finishes its work, you will see its response. As with all other dialog boxes, you can expand the view to see the content or minimize it to see it in context.

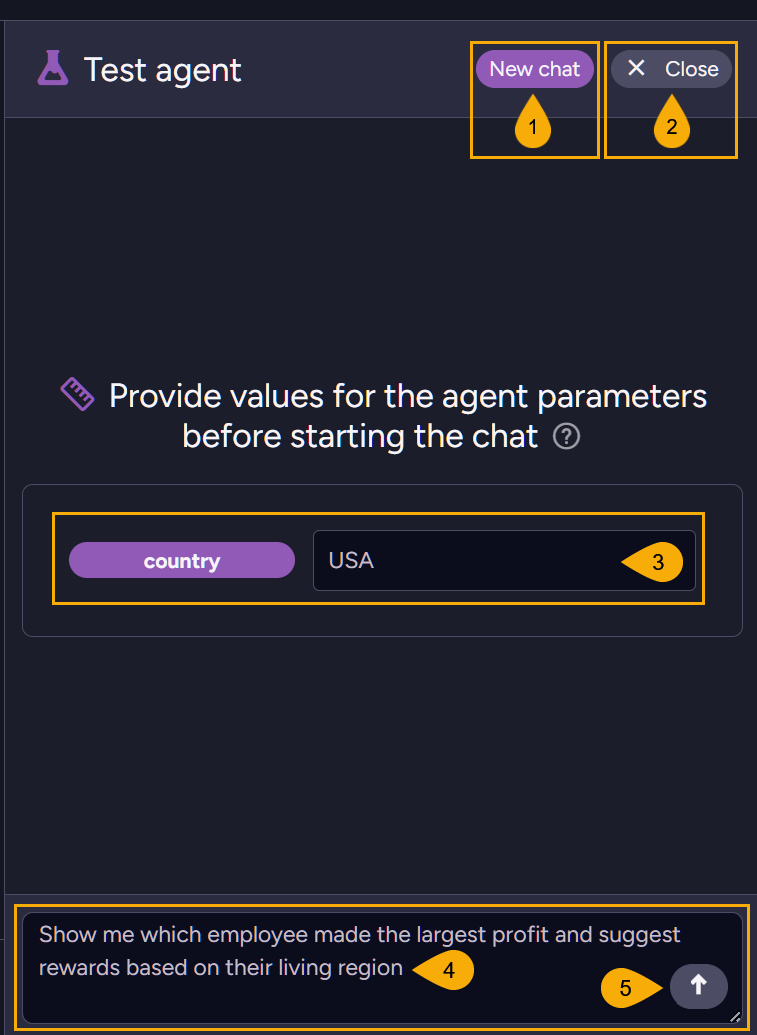

Test your agent

You can test your agent while creating or editing it, to examine its configuration and operability before you deploy it. The test interface resembles the one you see when you run your agent normally via Studio, but test conversations are not kept in the `@conversations` or `@conversations-history` collections.

To test your agent, click Test at the bottom of the agent configuration view.

- New Chat
  Click to start a new chat.
- Close
  Click to return to the AI Agents configuration view.
- Enter parameter value
  Enter a value for each parameter defined in the agent configuration. These values will replace the parameters wherever they are embedded in query or action tools.
- Agent prompt
  Explain to the agent, in natural language, what this session is about.
- Send prompt
  Click to pass your parameter values and user prompt to the agent and run the test.

You can keep sending the agent prompts and getting its replies in a continuous conversation.

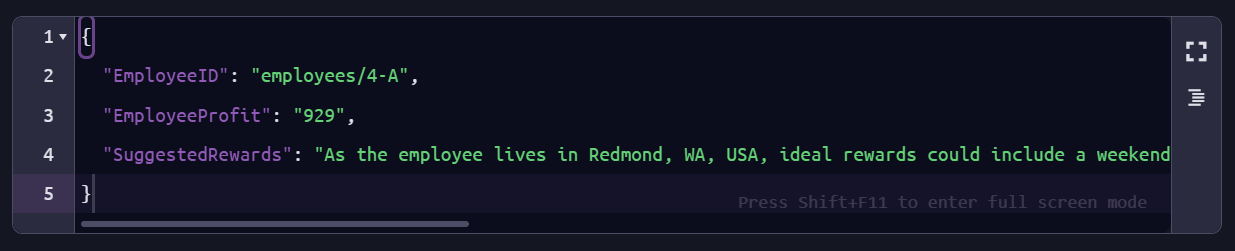

Runtime view and Test results

You will see the components that take part in the agent's run and be able to enter and send requested information for action tools. Each tool can be minimized to see it in context or expanded to view the data it carries.

When the LLM finishes processing, you will see its response.

You can expand the dialog or copy the content to see the response in detail.

```json
{
  "EmployeeID": "employees/1-A",
  "EmployeeProfit": "1760",
  "SuggestedRewards": "The employee lives in Redmond, WA, USA. For a special reward, consider a weekend getaway to the Pacific Northwest's scenic sites such as a stay at a luxury resort in Seattle or a relaxing wine tasting tour in Woodinville. Alternatively, you could offer gift cards for outdoor excursions in the Cascade Mountains or tickets to major cultural events in the Seattle area."
}
```
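Because the reply follows the response schema, a client can process it with ordinary JSON tooling. The Python sketch below turns the reply above into a typed object. Its field names come from the example reply, while the dataclass and function names are hypothetical. The rewards text is shortened to its first sentence for brevity.

```python
import json
from dataclasses import dataclass


@dataclass
class RewardSuggestion:
    employee_id: str
    profit: float
    suggested_rewards: str


def parse_reply(raw: str) -> RewardSuggestion:
    """Turn the agent's JSON reply into a typed object."""
    data = json.loads(raw)
    return RewardSuggestion(
        employee_id=data["EmployeeID"],
        # The reply carries the profit as a string ("1760"), so convert it.
        profit=float(data["EmployeeProfit"]),
        suggested_rewards=data["SuggestedRewards"],
    )


# The reply shown above, with the rewards text shortened.
reply = ('{"EmployeeID": "employees/1-A", "EmployeeProfit": "1760", '
         '"SuggestedRewards": "The employee lives in Redmond, WA, USA."}')
print(parse_reply(reply))
```

Declaring `EmployeeProfit` as a number in the sample response object would likely make the `float` conversion unnecessary.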