Ongoing Tasks: Queue ETL Overview


Message brokers are high-throughput, distributed messaging services that host data they receive from producer applications and serve it to consumer clients via FIFO data queues.


RavenDB takes the role of a producer in this architecture, via ETL tasks that:

- Extract data from specified document collections.

- Transform the data to new JSON objects.

- Load the JSON objects to the message broker.
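The three steps above can be pictured with a minimal, purely conceptual sketch. It does not use RavenDB's API: the `COLLECTIONS` dictionary, the `extract`, `transform`, `load`, and `run_etl` functions, and the in-memory `deque` standing in for a broker queue are all hypothetical names chosen for illustration.

```python
from collections import deque

# Hypothetical in-memory stand-in for a database with one document collection.
COLLECTIONS = {
    "Orders": [
        {"Id": "orders/1-A", "Company": "companies/1-A",
         "Lines": [{"Price": 10.0, "Quantity": 2}]},
        {"Id": "orders/2-A", "Company": "companies/2-A",
         "Lines": [{"Price": 5.0, "Quantity": 3}, {"Price": 1.5, "Quantity": 2}]},
    ]
}


def extract(collections, name):
    """Extract: yield every document of the specified collection."""
    yield from collections.get(name, [])


def transform(order):
    """Transform: build a new JSON-like object from the source document."""
    total = sum(line["Price"] * line["Quantity"] for line in order["Lines"])
    return {"Id": order["Id"], "Company": order["Company"], "Total": total}


def load(queue, message):
    """Load: append the object to the queue (stand-in for a broker)."""
    queue.append(message)


def run_etl(collections, name, queue):
    """Run the three steps for every document in the collection."""
    for document in extract(collections, name):
        load(queue, transform(document))
    return queue


broker_queue = run_etl(COLLECTIONS, "Orders", deque())

# A consumer reads from the front of the queue, so the oldest message comes first.
first_message = broker_queue.popleft()
```

In this sketch the consumer receives the `orders/1-A` object first because the queue is FIFO; a real broker adds persistence, distribution, and delivery guarantees that an in-memory queue does not model.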


RavenDB wraps JSON objects as CloudEvents messages prior to loading them to the designated broker, using the CloudEvents Library.


Supported message brokers currently include Apache Kafka and RabbitMQ.



Supported Message Brokers

RavenDB can currently produce data for the following queue applications: Apache Kafka and RabbitMQ.


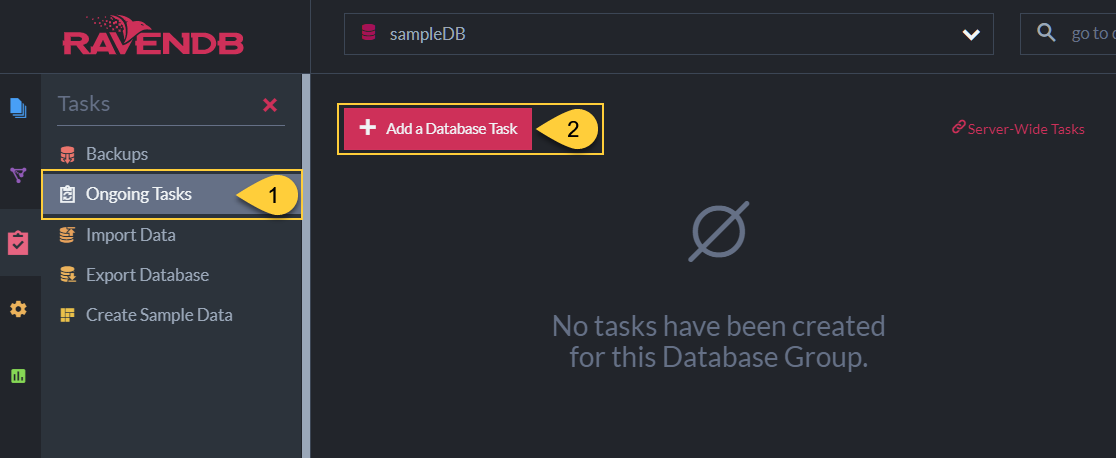

Ongoing Tasks

Click to open the ongoing tasks view.

Add a Database Task

Click to create a new ongoing task.


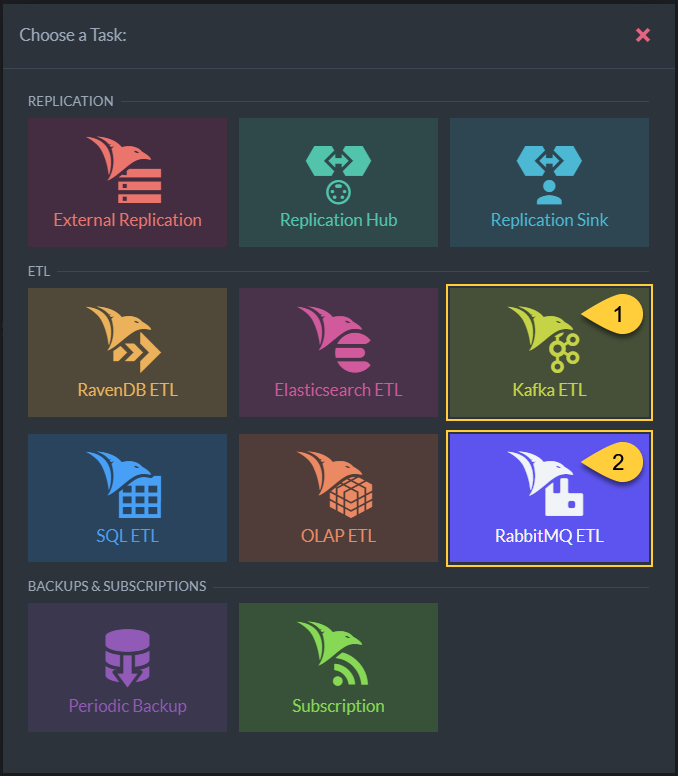

Kafka ETL

Click to define a Kafka ETL task.

RabbitMQ ETL

Click to define a RabbitMQ ETL task.

CloudEvents

After preparing a JSON object that needs to be sent to a message broker, the ETL task wraps it as a CloudEvents message using the CloudEvents Library.

To do that, the task adds required attributes to the JSON object as message headers, including:

| Attribute | Type | Description | Default Value |
|---|---|---|---|
| Id | string | Event Identifier | Document Change Vector |
| Type | string | Event Type | "ravendb.etl.put" |
| Source | string | Event Context | <ravendb-node-url>/<database-name>/<etl-task-name> |
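As a rough illustration of the defaults listed in the table, the sketch below assembles the three required attributes for a single document. The function name `cloudevents_attributes` and the sample node URL, database name, task name, and change vector are hypothetical; the attribute names are written in lowercase, as the CloudEvents specification spells them. This is not RavenDB's implementation, only a picture of what the default values look like.

```python
def cloudevents_attributes(node_url, database, task_name, change_vector):
    """Return the required attributes with the default values from the table.

    All parameter names are illustrative assumptions, not RavenDB API names.
    """
    return {
        # Default Id: the document's change vector.
        "id": change_vector,
        # Default Type: a fixed string.
        "type": "ravendb.etl.put",
        # Default Source: <ravendb-node-url>/<database-name>/<etl-task-name>
        "source": f"{node_url.rstrip('/')}/{database}/{task_name}",
    }


# Sample values below are made up for the example.
attributes = cloudevents_attributes(
    node_url="http://localhost:8080/",
    database="Northwind",
    task_name="OrdersToBroker",
    change_vector="A:7-hypotheticalDatabaseId",
)
```

With these sample inputs, `attributes["source"]` is `http://localhost:8080/Northwind/OrdersToBroker`.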

Optional Attributes

The optional partitionkey attribute can also be added. It is currently implemented only for Kafka.

| Optional Attribute | Type | Description | Default Value |
|---|---|---|---|
| partitionkey | string | Events relationship/grouping definition | Document ID |
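To make the grouping role of partitionkey more concrete, here is a small sketch that assumes the broker client hashes the key to choose a partition, so events sharing a key land in the same partition. The `partition_for` function is hypothetical, and `zlib.crc32` is only a deterministic stand-in: Kafka clients use their own hash function, so the partition numbers produced here will not match a real cluster.

```python
import zlib


def partition_for(partition_key: str, partition_count: int) -> int:
    """Map a key to a partition index in [0, partition_count).

    crc32 is an illustrative stand-in, not the hash a Kafka client uses.
    """
    return zlib.crc32(partition_key.encode("utf-8")) % partition_count


# With the default partitionkey (the document ID), every event for the
# same document is assigned to the same partition.
document_ids = ["orders/1-A", "orders/2-A", "orders/1-A"]
assignments = [(doc_id, partition_for(doc_id, 3)) for doc_id in document_ids]
```

The first and third entries of `assignments` carry the same partition index because they share a document ID, which is what keeps related events together in a single ordered partition.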

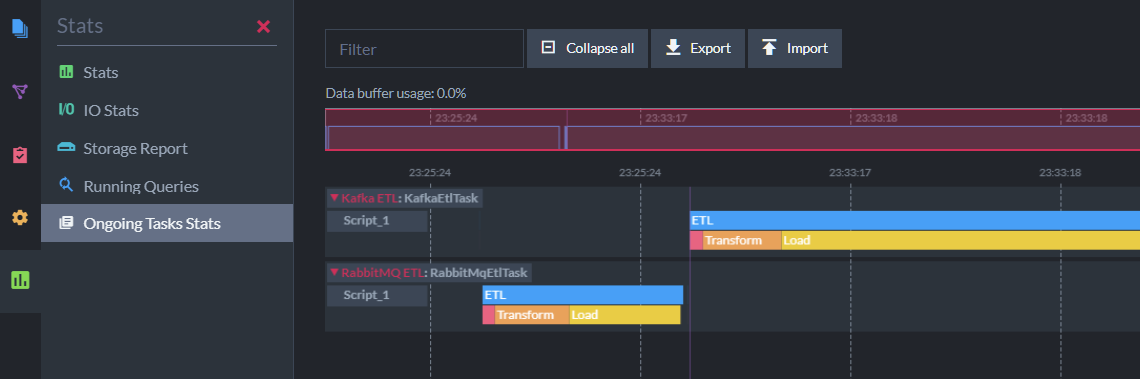

Task Statistics

Use Studio's ongoing tasks stats view to see various statistics related to data extraction, transformation, and loading to the target broker.